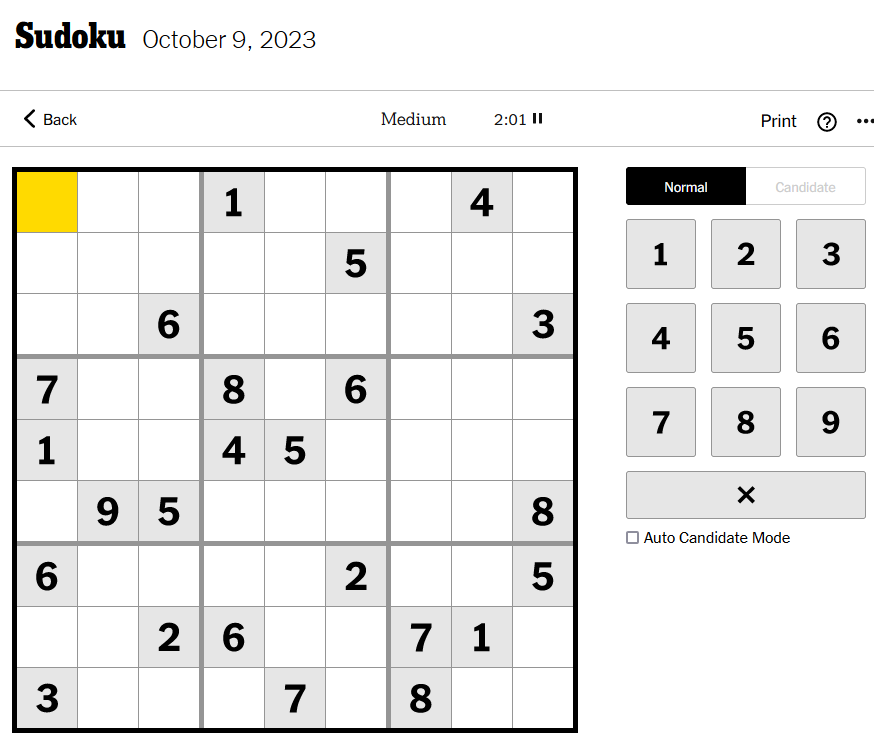

I’ve been playing the New York Times Sudoku on occasion, and it takes me almost an hour to solve the medium puzzle.

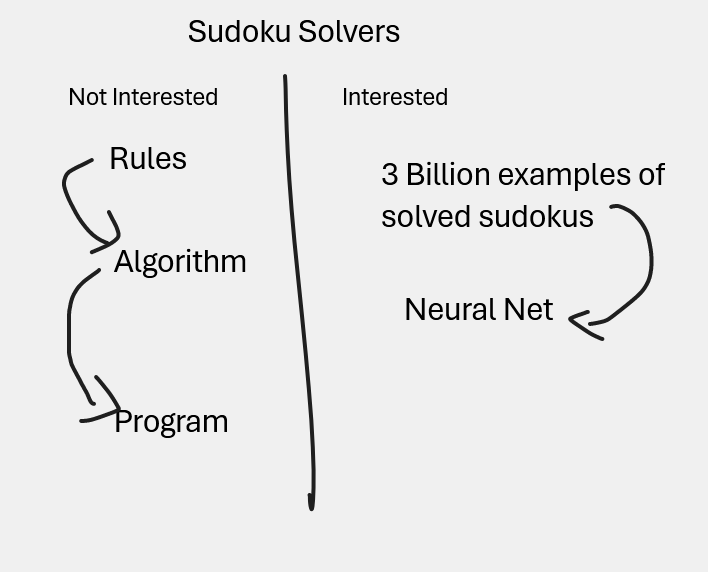

Fun isn’t fun until it’s ruined, so, as is de rigueur, I started kicking around the idea of getting a computer to solve sudoku puzzles. Of course, sudoku solvers already exist on the internet, so I won’t be treading any new ground for humanity – but I’m interested in a subset of those solvers: specifically, ones you don’t have to “teach” the rules to.

Baby Sudoku For Baby Minds

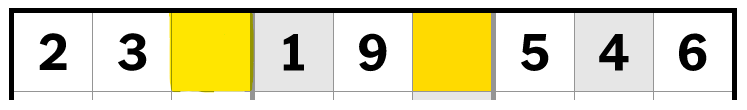

My first concern was whether a simple feed-forward neural network could even solve the easiest problem in sudoku, which is a row or column with only one number missing:

Even if you’ve never played sudoku, you can probably deduce what number goes in the box. I was not convinced this would be particularly easy for a simple neural network to figure out. After all, how would you set up a problem like this for any ML algorithm? I decided to do it like this:

- Treat the numbers as categories. The numbers could be anything – and their actual relationships (2 being 1+1, 9 being 3 x 3) are irrelevant to the puzzle.

- Make the position of the numbers irrelevant. You can imagine that you don’t need the missing number to be in any particular box to solve the above puzzle.

- One-hot encode the categories. If you don’t know what that means, you can read about it here (or ignore this point).
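To make the one-hot point concrete: each shown number becomes a 9-long vector with a single 1 in that number’s column, and stacking the 8 shown numbers gives an 8×9 matrix, or 72 values once flattened. Here is a minimal NumPy sketch, assuming digits 1–9; `one_hot_digits` is a hypothetical helper, not code from this post. Summing the columns also shows that the absent digit is the only column with no 1 in it.

```python
import numpy as np

def one_hot_digits(digits, n_categories=9):
    """One-hot encode digits 1..n_categories (hypothetical helper).

    Returns an array of shape (len(digits), n_categories) where row i has a
    single 1 in column digits[i] - 1.
    """
    digits = np.asarray(digits)
    encoded = np.zeros((len(digits), n_categories), dtype=np.float32)
    encoded[np.arange(len(digits)), digits - 1] = 1.0
    return encoded

# Eight shown numbers from a row; 5 is the missing one.
shown = [3, 1, 9, 7, 2, 8, 6, 4]
x = one_hot_digits(shown)
print(x.shape)            # (8, 9)
print(x.flatten().shape)  # (72,)
print(x.sum(axis=0))      # column index 4 (digit 5) is the only zero
```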

I wrote a small block of code to generate the above problem 20,000 times (with a random number from 1 to 9 missing each time).

import pandas as pd

import numpy as np

# %%

categories = ['1','2','3','4','5','6','7','8','9']

predictors = categories[:-1]

def generateSingleLineSudoku(samples):

df_list = []

for i in np.arange(0,samples):

df_list.append(

np.random.choice(

np.arange(1,10,1),size=9,replace=False

)

)

return pd.DataFrame(

df_list,

columns=predictors+['target']

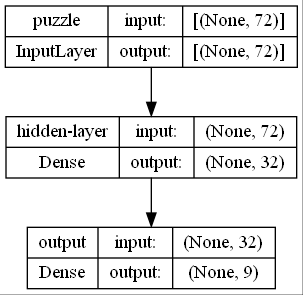

)Then I one hot encoded the predictors (the 8 numbers shown) and the target (the 9th number – aka the thing we’re trying to predict), and trained a single-hidden-layer neural network on 10,000 of the predictors and target:

All the “Nones” refer to batch sizes, which aren’t important here. The critical thing to see is that the model takes 72 values (8 numbers × 9 categories) and outputs 9, which sounds like 9 times too many given that I claimed it only predicts one missing number from 8 others. The devil in the details is the one-hot encoding: each of the 9 outputs represents the probability that that particular number is missing.
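To make the 72-in, 9-out shape concrete, here is a minimal NumPy sketch of a comparable single-hidden-layer network trained with plain mini-batch gradient descent. It is not the model from this post: the hidden size (64), ReLU activation, learning rate, batch size, and epoch count are all assumptions, and `make_dataset`, `init_params`, `forward`, and `train` are hypothetical helpers. It regenerates the data with `rng.permutation` instead of reusing the pandas code.

```python
import numpy as np

rng = np.random.default_rng(0)

def make_dataset(samples):
    """Rows are random orderings of 1..9: the first 8 digits are shown and the
    9th is the missing target. Returns one-hot inputs, one-hot targets, digits."""
    rows = np.array([rng.permutation(9) + 1 for _ in range(samples)])
    shown, target = rows[:, :8], rows[:, 8]
    x = np.eye(9)[shown - 1].reshape(samples, 72)  # 8 slots x 9 categories
    y = np.eye(9)[target - 1]                      # 9 output categories
    return x, y, target

def init_params(n_in=72, n_hidden=64, n_out=9):
    # Assumed sizes and He-style initialisation
    return {
        "W1": rng.normal(0.0, np.sqrt(2.0 / n_in), (n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, np.sqrt(2.0 / n_hidden), (n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }

def forward(p, x):
    h_pre = x @ p["W1"] + p["b1"]
    h = np.maximum(h_pre, 0.0)                           # ReLU hidden layer
    logits = h @ p["W2"] + p["b2"]
    logits = logits - logits.max(axis=1, keepdims=True)  # stabilise softmax
    probs = np.exp(logits)
    probs /= probs.sum(axis=1, keepdims=True)            # 9 probabilities per row
    return h_pre, h, probs

def train(p, x, y, epochs=20, batch_size=100, lr=0.2):
    """Mini-batch gradient descent on mean cross-entropy."""
    for _ in range(epochs):
        order = rng.permutation(len(x))
        for start in range(0, len(x), batch_size):
            idx = order[start:start + batch_size]
            h_pre, h, probs = forward(p, x[idx])
            d_logits = (probs - y[idx]) / len(idx)      # softmax + cross-entropy gradient
            d_h = (d_logits @ p["W2"].T) * (h_pre > 0)  # back through the ReLU
            grads = {"W2": h.T @ d_logits, "b2": d_logits.sum(axis=0),
                     "W1": x[idx].T @ d_h, "b1": d_h.sum(axis=0)}
            for k, g in grads.items():
                p[k] -= lr * g
    return p

x_train, y_train, _ = make_dataset(10_000)
x_test, _, target_test = make_dataset(1_000)
params = train(init_params(), x_train, y_train)
probs = forward(params, x_test)[2]
accuracy = np.mean(probs.argmax(axis=1) + 1 == target_test)
print(f"held-out accuracy: {accuracy:.3f}")
print(np.round(probs[:4], 2))  # 4 rows; columns are digits 1..9
```

The argmax of each row is the predicted missing digit, which is how the probability table is read.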

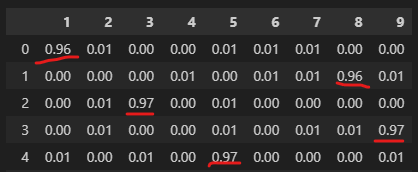

Here are 4 different predictions (rows) with columns 1 through 9, where each cell represents the probability that the missing number is the column number.

These match the actual missing numbers for these rows. Suffice it to say, this model is very good at predicting the missing number. On further reflection, this isn’t too surprising. The model only has to “see” 9 unique combinations of numbers (one for each possible missing digit) to have seen every case, and I trained it on 10,000 examples.

Interestingly, if I remove a second number and ask the neural net to predict what is missing from this puzzle:

It correctly predicts that both 7 AND 8 are missing! So it has appropriately generalized to a slightly more complex problem.
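Part of why this generalizes: a rule that needs no training at all already answers both questions. Summing the one-hot rows counts how often each digit appears, and any digit with a count of zero is missing. A network that puts negative weight on those counts would behave this way, though that is an intuition rather than something inspected in the trained model. Here is a minimal sketch with `missing_digits` as a hypothetical helper:

```python
import numpy as np

def missing_digits(shown, n_categories=9):
    """Return the digits (1..n_categories) that never appear in `shown`."""
    one_hot = np.eye(n_categories, dtype=int)[np.asarray(shown) - 1]  # one row per shown number
    counts = one_hot.sum(axis=0)  # how many times each digit appears
    return [int(d) + 1 for d in np.flatnonzero(counts == 0)]

print(missing_digits([3, 1, 9, 7, 2, 8, 6, 4]))  # [5]
print(missing_digits([3, 1, 9, 2, 5, 6, 4]))     # [7, 8]
```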

Toddler Sudoku for Toddler Minds

The next step is to train a model that can solve a sudoku problem with more constraints, and a small sudoku board is a good place to start. I figured I would use the number of possible solutions as a guide for how large a board to use, but it turns out the math behind counting solutions is complex. A 9×9 sudoku board has \(6.671 \times 10^{21}\) solutions, and I wasn’t able to find a quick reference table or function that gives the total number of solutions for a given board size. A 1×1 board has 1 possible solution and a 2×2 board has 2. A 3×3 grid is too small to have boxes, so it is just a Latin square, and it has 12 solutions. That already outpaces factorial growth (3! = 6), and a 9×9 board likewise has far more than 9! solutions. I’ll guess that a 4×4 board (which has 288 solutions) is large enough to create some interesting unseen problems for my neural net.
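The small counts are easy to check by brute force. The sketch below is a simple backtracking counter (`count_solutions` is a hypothetical helper) that requires distinct values in every row and column of an n×n grid, and additionally in every box when n is a perfect square. Under those assumptions it reports 1, 2, 12 and 288 for n = 1 to 4: a 3×3 grid has no boxes and is just a Latin square, while a 4×4 board has 2×2 boxes. The same approach is hopeless at 9×9.

```python
from math import isqrt

def count_solutions(size):
    """Count completed size x size grids with no repeated value in any row or
    column, and (when size is a perfect square > 1) in any box."""
    box = isqrt(size)
    use_boxes = size > 1 and box * box == size
    grid = [[0] * size for _ in range(size)]

    def ok(r, c, v):
        if v in grid[r] or any(grid[i][c] == v for i in range(size)):
            return False
        if use_boxes:
            br, bc = r - r % box, c - c % box
            return all(grid[i][j] != v
                       for i in range(br, br + box) for j in range(bc, bc + box))
        return True

    def fill(cell):
        # Fill cells in row-major order, counting every complete grid reached.
        if cell == size * size:
            return 1
        r, c = divmod(cell, size)
        total = 0
        for v in range(1, size + 1):
            if ok(r, c, v):
                grid[r][c] = v
                total += fill(cell + 1)
                grid[r][c] = 0
        return total

    return fill(0)

for n in range(1, 5):
    print(n, count_solutions(n))
```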

The biggest change from the previous problem is that in the new problem – position matters – so the network will have to learn to do more than just pick the missing numbers. The difficulty of a sudoku puzzle can also vary based on how filled out it is at the start. In general, the more filled out, the easier the puzzle. I generated a dataset of increasingly difficult puzzles and trained a new neural network to try and solve 4×4 sudokus.
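Here is a minimal sketch of generating 4×4 training pairs at increasing difficulty, assuming a randomized backtracking fill for each solution and uniformly random blanking (0 = empty) for each puzzle. The generator actually used here isn’t shown, so `random_solution`, `make_puzzle`, and `is_valid_solution` are hypothetical. Nothing guarantees a unique solution, so the sketch also includes a checker that accepts any completion that keeps the clues and obeys the rules, rather than requiring an exact match with the stored solution.

```python
import random

SIZE, BOX = 4, 2  # 4x4 board with 2x2 boxes

def random_solution(rng):
    """Fill an empty board by backtracking, trying digits in random order."""
    grid = [[0] * SIZE for _ in range(SIZE)]

    def ok(r, c, v):
        if v in grid[r] or any(grid[i][c] == v for i in range(SIZE)):
            return False
        br, bc = r - r % BOX, c - c % BOX
        return all(grid[i][j] != v
                   for i in range(br, br + BOX) for j in range(bc, bc + BOX))

    def fill(cell):
        if cell == SIZE * SIZE:
            return True
        r, c = divmod(cell, SIZE)
        for v in rng.sample(range(1, SIZE + 1), SIZE):  # shuffled candidates
            if ok(r, c, v):
                grid[r][c] = v
                if fill(cell + 1):
                    return True
                grid[r][c] = 0
        return False

    fill(0)
    return grid

def make_puzzle(solution, frac_missing, rng):
    """Blank out (set to 0) a random fraction of the cells."""
    cells = [(r, c) for r in range(SIZE) for c in range(SIZE)]
    puzzle = [row[:] for row in solution]
    for r, c in rng.sample(cells, round(frac_missing * len(cells))):
        puzzle[r][c] = 0
    return puzzle

def is_valid_solution(grid, puzzle):
    """True if `grid` keeps every clue and has no repeats in rows, columns or boxes."""
    if any(puzzle[r][c] and grid[r][c] != puzzle[r][c]
           for r in range(SIZE) for c in range(SIZE)):
        return False
    digits = set(range(1, SIZE + 1))
    rows = [set(row) for row in grid]
    cols = [{grid[r][c] for r in range(SIZE)} for c in range(SIZE)]
    boxes = [{grid[r][c] for r in range(br, br + BOX) for c in range(bc, bc + BOX)}
             for br in range(0, SIZE, BOX) for bc in range(0, SIZE, BOX)]
    return all(group == digits for group in rows + cols + boxes)

rng = random.Random(0)
dataset = []
for frac in (0.25, 0.4, 0.6):  # increasingly difficult
    for _ in range(1000):
        solution = random_solution(rng)
        dataset.append((make_puzzle(solution, frac, rng), solution))

# Scoring by validity rather than exact match: two different completions
# of the same puzzle (bottom half blank) are both accepted.
puzzle = [[1, 2, 3, 4], [3, 4, 1, 2], [0, 0, 0, 0], [0, 0, 0, 0]]
a = [[1, 2, 3, 4], [3, 4, 1, 2], [2, 1, 4, 3], [4, 3, 2, 1]]
b = [[1, 2, 3, 4], [3, 4, 1, 2], [4, 3, 2, 1], [2, 1, 4, 3]]
print(is_valid_solution(a, puzzle), is_valid_solution(b, puzzle), a == b)  # True True False
```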

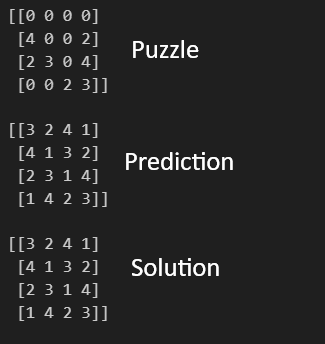

After 2 minutes of training, the network could solve puzzles with 60% of the cells missing with 94% accuracy, though my accuracy measure does not account for sudokus with multiple valid solutions. Here is a case in which the network found a valid solution that differs from the prescribed one:

Original Sudoku For Spoon Bending Minds

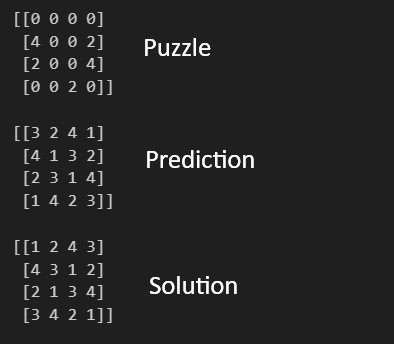

With proof that the neural network could learn what it needs to solve small sudoku puzzles, I scaled the network up to see how it would handle bigger puzzles, and, unsurprisingly, it performed worse. More data and a bigger network are the obvious improvements. I also found some issues with the Python package I was using to generate new puzzles and solutions (it was generating the same solutions many times over). Still, the trained network could solve simple 9×9 puzzles:

I highlighted the missing content in yellow, and the correct predictions made by the model in green.

The next step is to generate more unique puzzles and possibly increase the complexity of the network so that I can train it on more difficult problems, with the eventual goal of solving the New York Times’ difficult puzzles.
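For the “more unique puzzles” step, one cheap way to get many distinct valid 9×9 solutions is a swapped-in technique, not what the Python package mentioned earlier does. Start from a closed-form valid grid and apply random moves that preserve validity: relabel the digits, shuffle the bands and the rows within each band, and do the same for stacks and columns. The samples are not uniform over all valid grids. Here is a minimal sketch with hypothetical helpers `shuffled_grid` and `dedupe`, the latter a set-based check for the duplicate-solution problem. Puzzles would then come from blanking cells in each solution.

```python
import random

BOX = 3
SIZE = BOX * BOX  # 9x9 board

def base_value(r, c):
    # A closed-form valid grid (0-based values): rows, columns and boxes never repeat.
    return (BOX * (r % BOX) + r // BOX + c) % SIZE

def shuffled_grid(rng):
    """Apply random validity-preserving moves to the base grid."""
    groups = range(BOX)
    # The inner sample is re-drawn for each band/stack, so rows (and columns)
    # are shuffled independently within each one.
    rows = [g * BOX + r for g in rng.sample(groups, BOX) for r in rng.sample(groups, BOX)]
    cols = [g * BOX + c for g in rng.sample(groups, BOX) for c in rng.sample(groups, BOX)]
    digits = rng.sample(range(1, SIZE + 1), SIZE)  # random relabeling 0..8 -> 1..9
    return [[digits[base_value(r, c)] for c in cols] for r in rows]

def dedupe(grids):
    """Drop repeated grids, keeping first occurrences in order."""
    seen, unique = set(), []
    for g in grids:
        key = tuple(map(tuple, g))
        if key not in seen:
            seen.add(key)
            unique.append(g)
    return unique

rng = random.Random(0)
grids = [shuffled_grid(rng) for _ in range(10_000)]
print(len(grids), len(dedupe(grids)))
```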

Until next time.